We are now in an era where AI does not just "learn and make decisions," but the models that make those decisions are themselves targets of attack.

In particular, the weights and biases of machine learning models are central to determining their output, and if these are tampered with, predictions can be intentionally distorted.

These model manipulation attacks (Model Manipulation / Targeted Misclassification) can be exploited to consistently misidentify specific classes or to evade detection systems.

For example, it could be used to "overlook only specific people or license plates" in surveillance cameras or OCR authentication systems.

In this article, we will demonstrate how to tamper with the final layer bias of a model and completely disable a specific class (the number "2") through the HackTheBox CTF challenge Fuel Crisis.

We will also explain the basics of AI security that can be learned in the process and key points for defense.

About HackTheBox

This time, we are actually using HackTheBox (HTB) to verify vulnerabilities.

HackTheBox is a hands-on CTF platform where participants can practice in a variety of security fields, including web applications, servers, and networks.

Its greatest feature is that participants can learn by actually accessing the machines and applications that will be attacked and getting their hands dirty.

The challenge covered here belongs to the Challenge category and was previously offered on HackTheBox as an active challenge; it now requires a VIP plan or higher (note that only active challenges are available to users on the free plan).

There are also machines and challenges in a variety of categories, including Web, Reversing, Pwn, and Forensics, so you can tackle them at a level that suits you.

If you want to seriously hone your skills with HackTheBox, be sure to sign up for the VIP plan and take full advantage of past machines and challenges.

👉 Visit the official HackTheBox website here

👉 For detailed information on how to register for HackTheBox and the differences between plans, please click here.

Challenge Overview: Fuel Crisis

The challenge is to somehow dock the spaceship "Phalcon" with the space station B1-4S3D, which is banned from docking, while running low on fuel.

There are two OCR cameras at the station's gate, and the first camera reads the ship's ID and reliability, which the second camera re-evaluates and compares. If the two results are significantly different, the ship will be denied entry.

Fortunately, a fellow hacker has seized the second camera's model file upload privileges, and is even able to disable the verification process at the moment their ship passes by.

The player's task is to exploit this situation by tampering with the model's internal parameters so that the number "2" in their ship's ID is the only digit that is never recognized correctly.

Key points

- Target of attack: Machine learning model (Keras/h5 format) running on the second OCR camera on the space station

- Objective: Always misrecognize the number "2" and prevent your ship's ID from being detected correctly.

- Method: Set the bias value for the "2" class in the final Dense layer of the model to a large negative number (e.g., -100).

- Success condition: The other four ships are recognized correctly, the "2" in your ship's ID is not recognized, and docking succeeds.

What is Keras and h5 format?

In Fuel Crisis, we directly rewrite the bias value of the final Dense layer in the h5 file to force the output of a specific class (number "2") to be low, so that it is never recognized.

Keras

Keras is a high-level deep learning framework for Python.

It is a library that makes it easy to operate backends such as TensorFlow and Theano, and allows you to build, train, and save models using an intuitive API.

In this Fuel Crisis project, an OCR (character recognition) model was created using Keras, and pre-trained weights were distributed.

h5 format

.h5 is the extension of the data storage format called HDF5 (Hierarchical Data Format version 5).

Keras can save trained models and their weights in h5 format.

This format has a hierarchical structure and can store the weights and biases of each layer. While it is difficult to read in a text editor, you can open the contents with tools such as h5py or HDFView and edit them directly.
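As a rough sketch of what that inspection looks like, the following lists every dataset in an HDF5 file with h5py. To stay self-contained it first builds a tiny stand-in file. The file name `demo_weights.h5` and the helpers `build_demo_file` and `list_datasets` are made up for illustration, and the dataset paths only mirror the `dense/dense/bias:0` path used in this article's attack script; a real Keras file may be laid out differently.

```python
import os
import tempfile

import h5py
import numpy as np


def build_demo_file(path):
    """Create a tiny stand-in weight file (hypothetical layout, not a real model)."""
    with h5py.File(path, "w") as f:
        # Intermediate groups are created automatically from the path.
        f.create_dataset("model_weights/dense/dense/kernel:0",
                         data=np.zeros((4, 10), dtype="float32"))
        f.create_dataset("model_weights/dense/dense/bias:0",
                         data=np.zeros(10, dtype="float32"))


def list_datasets(path):
    """Return (name, shape, dtype) for every dataset in the file."""
    found = []

    def visit(name, obj):
        # visititems calls this for groups and datasets; keep datasets only.
        if isinstance(obj, h5py.Dataset):
            found.append((name, obj.shape, str(obj.dtype)))

    with h5py.File(path, "r") as f:
        f.visititems(visit)
    return found


demo_path = os.path.join(tempfile.mkdtemp(), "demo_weights.h5")
build_demo_file(demo_path)
for name, shape, dtype in list_datasets(demo_path):
    print(name, shape, dtype)
```

Running the same `list_datasets` helper against a real model file is a quick way to find which dataset holds the final layer's bias before editing anything.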

What does it mean to invalidate a specific class by tampering with the model?

Model tampering to disable specific classes is an attack method that rewrites the internal parameters of a trained AI model to ensure that specific classes are never predicted.

For example, in OCR or facial recognition, this can cause specific numbers or people to be consistently recognized incorrectly, evading detection.

This method targets the inference stage, and differs from attacks that change the input data (adversarial examples) in that it directly manipulates the core of the model itself.

How the attack works

This attack intentionally skews the final class scores that a machine learning model produces by altering its internal parameters.

Specifically, by setting the bias value of the final layer (such as the Dense layer) to an extremely negative number, the score for that class will always be low, and it will no longer be considered for prediction.

As other parameters remain unchanged, the overall behavior and reliability remain largely unchanged.

- Set the bias value to a large negative value → the corresponding class will always get a low score

- The accuracy of other classes is largely maintained, so tampering is not noticeable.

- In Fuel Crisis, the target class is the number "2"
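The effect of the bias can be seen with a few lines of plain Python. This is only a sketch: the `scores` list is a made-up set of pre-bias outputs for a single digit image, not values taken from the challenge model.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical pre-bias scores for one digit image; class 2 is the clear winner.
scores = [0.1, 0.3, 6.0, 1.5, 0.2, 0.0, 0.4, 2.0, 0.3, 0.1]
bias = [0.0] * 10

before = softmax([s + b for s, b in zip(scores, bias)])

bias[2] = -100.0  # the tampered bias for class 2
after = softmax([s + b for s, b in zip(scores, bias)])

print("before: predicted class", before.index(max(before)), "p(2) =", before[2])
print("after:  predicted class", after.index(max(after)), "p(2) =", after[2])
```

Because only one bias entry changes, the relative ordering of the other nine classes is untouched, which is why the tampering is hard to notice from the other ships' results.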

Attack flow

The attack involves the following steps:

- Get the trained model file (.h5 format)

- Open the contents with h5py or HDFView and identify the bias value corresponding to "Class 2" of the final Dense layer.

- Change the bias value to an extreme negative number (e.g. -100)

- Re-upload the modified model to the system

- During inference, IDs containing "2" are always mistaken for other numbers, avoiding detection.

Conditions to be met

There are several prerequisites for this attack to be successful.

If these conditions are not met, the intended effect will not be achieved even if the attack is carried out.

- Attackers can directly retrieve and edit model files

- No tamper verification (signature or hash check) is performed when uploading a model

- Disabling a specific class does not have any noticeable effect on other behaviors.

I actually tried hacking it!

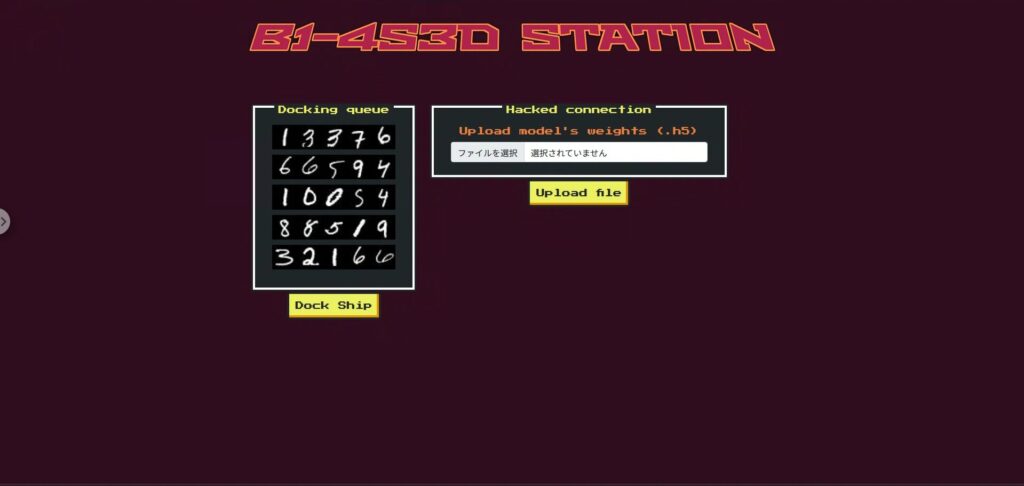

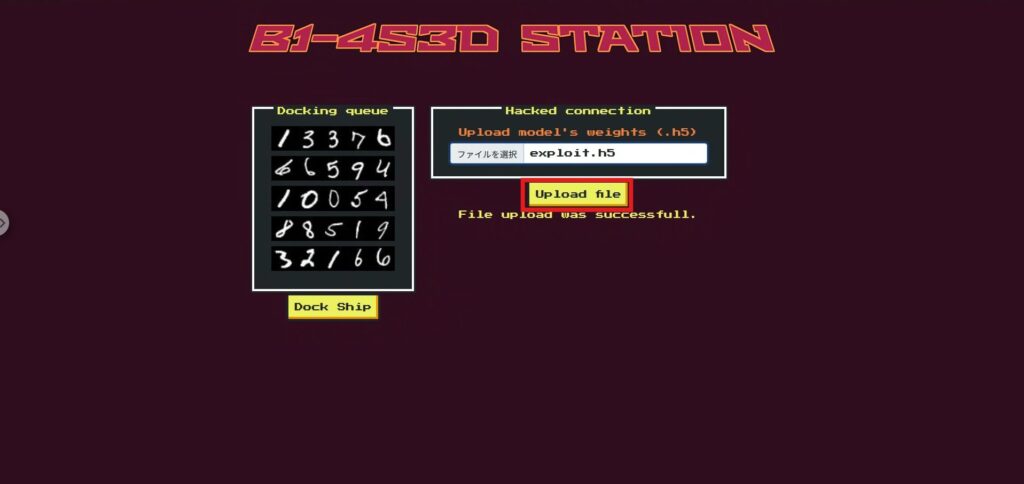

Before we get into the attack, let's first take a quick look at the Fuel Crisis web app.

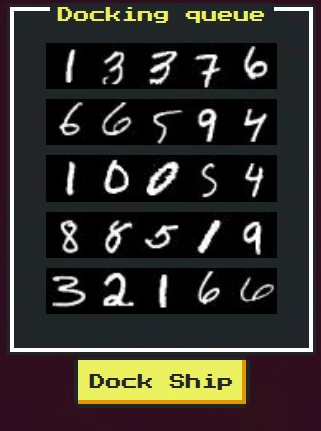

The app's UI mimics a space station docking gate, with five spaceships passing through in sequence. Each ship is assigned a ship ID (a string of numbers).

The main elements on the screen are as follows:

- Ship ID display area: Five ship ID images are displayed. Your ship is the last to pass, and its ID contains the number "2."

- Model file upload form: You can select and upload a model file in .h5 format. This is where you will upload the tampered model.

- Dock button: Ships are identified using an OCR model to determine whether or not docking is possible. If the tampering is successful, only your own ship will be prevented from being recognized.

- Result display area: The recognition result, reliability, and docking possibility of each ship are displayed. If successful, a flag will appear here.

Understanding how this UI works will help you follow the attack steps more smoothly.

Next, let's take a look at the code to see what is happening on this screen.

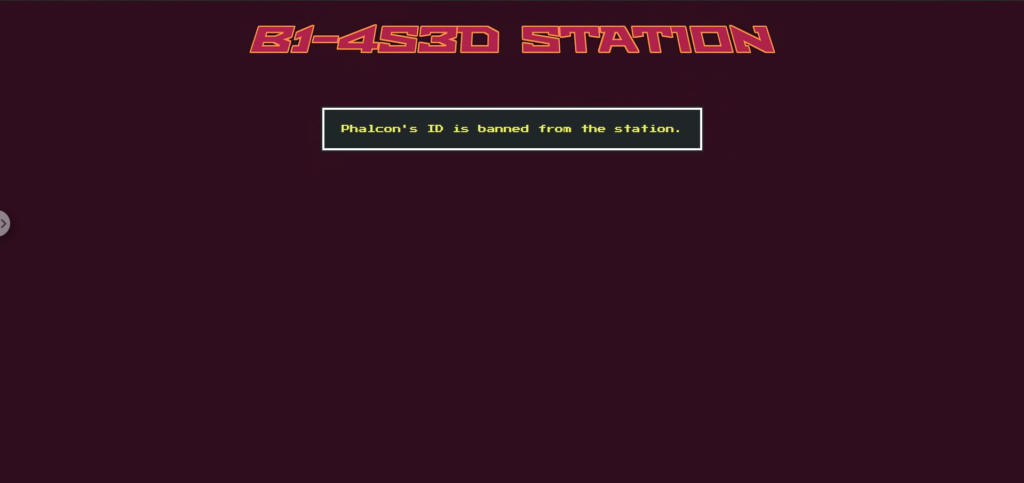

Scouting Phase: Check for banned ships and model swap mechanics

First, try pressing the Dock button and you will see a message saying that only "Phalcon" is prohibited from docking.

If you check the source code, you will see why. At the /dock endpoint, unlike other spaceships, Phalcon passes through the second gate with validation_check=False , which means it skips the digit reliability check when parsing the image.

Additionally, self.bannedId = "32166" is set, and if Phalcon's final ID equals this value, an exception is raised and the ship is not allowed through.

    @app.route('/dock', methods=['POST'])
    def dock():
        try:
            for spaceship in b1_4s3d_station.spaceships:
                id, id_confidence = b1_4s3d_station.passFirstGate(spaceship.id_image)
                if spaceship.name == "Phalcon":
                    b1_4s3d_station.passSecondGate(id, spaceship.id_image, id_confidence, validation_check=False)
                else:
                    b1_4s3d_station.passSecondGate(id, spaceship.id_image, id_confidence)
        except DockingException as de:
            return render_template('dock.html', response = spaceship.name+str(de))
        except Exception as e:
            return render_template('dock.html', response = 'Unexpected error while docking.')
        return render_template('dock.html', response = flag)

Reconnaissance phase: The role of upload file

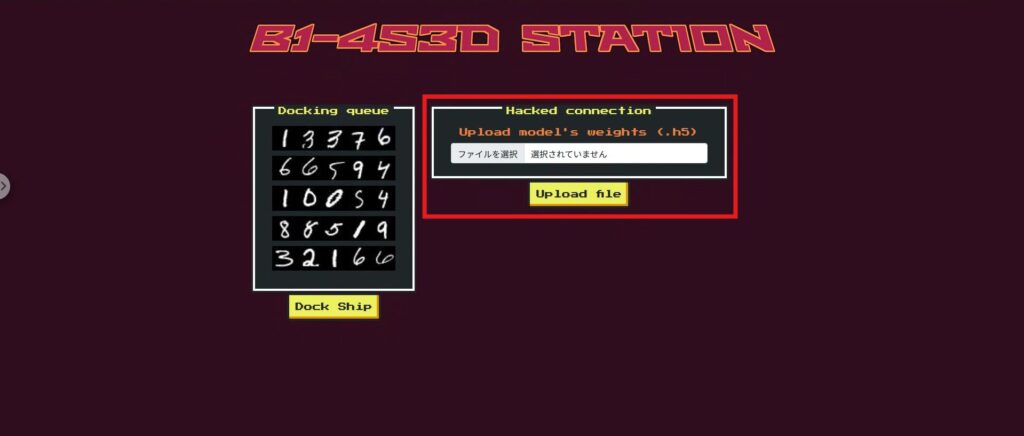

The Upload file function on the top page actually replaces the second gate model (second_gate).

This means that players can freely rewrite the decision logic of the second gate by uploading their own modified Keras .h5 model.

    @app.route('/', methods=['GET', 'POST'])
    def index():
        ids = []
        for spaceship in b1_4s3d_station.spaceships:
            ids.append(spaceship.idToBase64())
        if request.method == 'POST':
            if 'file' not in request.files:
                return render_template('index.html', ids = ids, response = "File upload failed.")
            file = request.files['file']
            if file.filename == '':
                return render_template('index.html', ids = ids, response = "File upload failed.")
            if file and allowed_file(file.filename):
                try:
                    file.save(os.path.join(app.config['UPLOAD_FOLDER'], "uploaded.h5"))
                    b1_4s3d_station.second_gate = tf.keras.models.load_model("./application/models/uploaded.h5")
                except:
                    return render_template('index.html', ids = ids, response = "File upload failed.")
            return render_template('index.html', ids = ids, response = "File upload was successfull.")
        else:
            return render_template('index.html', ids = ids)

Reconnaissance Phase: Which Numbers to Falsify

It's important to note that the reliability check is enforced for all ships except Phalcon, so if you make major changes to the entire second gate model, the other ships will no longer reach the same reliability as at gate 1, causing the whole thing to fail.

Therefore, we narrow our target to the number "2," which is only found in Phalcon's ID.

By significantly lowering the reliability of "2" in the second gate model, Phalcon's final ID will become a different number string from "32166," allowing us to avoid the prohibited ID detection.

Furthermore, this change will not affect the IDs of other ships.
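The choice of target digit can be checked mechanically. In the sketch below only `32166` comes from the challenge source; the four other IDs are made-up placeholders, so substitute the IDs actually shown on the page.

```python
def digits_unique_to(target_id, other_ids):
    """Digits that occur in target_id but in none of other_ids."""
    seen_elsewhere = set("".join(other_ids))
    return sorted(set(target_id) - seen_elsewhere)


banned_id = "32166"  # from the challenge source
# Hypothetical IDs for the four other ships; read the real ones from the page.
other_ids = ["13376", "66594", "10057", "48113"]

print(digits_unique_to(banned_id, other_ids))
```

Any digit this returns can be disabled without changing what the second gate reads for the other ships.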

Reconnaissance Phase: Model (.h5)

Since this is a CTF, the model file (.h5) originally used on the server has been provided in advance.

This file is a trained neural network model saved in Keras format, and can be replaced with the model for the second gate using the upload function.

The aim of the attack is to intentionally lower the confidence (prediction score) of this model for the number 2.

This will remove the number 2 from Phalcon's ID, allowing it to avoid the banned ID 32166.

Next we'll look at how to actually load and tamper with this .h5 model.

Attack phase: Disable only the "2" on the second gate to avoid the prohibited ID

We will overwrite only the bias element corresponding to class 2 in the final classification layer (10 classes) of the provided trained model (model.h5) with -100. By directly editing the HDF5 file without using Keras, we create a replacement model (exploit.h5) while maintaining the file structure and size.

Attack script:

If you run the following as is, it will copy model.h5 to create exploit.h5 and rewrite index=2 in dense > dense > bias:0 to -100.

    import shutil, h5py, numpy as np

    shutil.copyfile("model.h5", "exploit.h5")  # Copy the original as is
    with h5py.File("exploit.h5", "r+") as f:
        ds = f["model_weights/dense/dense/bias:0"]  # dense > dense > bias:0
        ds[2] = np.array(-100.0, dtype=ds.dtype)  # Set index=2 to -100

Once exploit.h5 has been created by the script, select exploit.h5 in the Upload file section on the top page and upload it.

Next, run Dock (here, inference is performed using the model with the replaced second_gate).

Now, we can obtain the flag!

Mechanism (why does it work?)

In short, it pins the score of class "2" to almost zero.

- With the bias for class 2 set to -100, the probability of "2" after softmax will be practically zero

- Phalcon skips the trust check at the second gate and only checks if the final ID is 32166

- Since "2" is never output, the string 32166 can never be formed → Phalcon passes, bypassing the prohibited ID check.
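The three points above can be replayed in a toy simulation. This is not the challenge's code: `toy_logits` and `read_id` are hypothetical helpers, the logit values are invented, and the real app runs an actual Keras model on the ID image. Only the banned ID `32166` is taken from the source.

```python
BANNED_ID = "32166"  # value of self.bannedId in the challenge source


def toy_logits(true_digit, runner_up):
    """Hypothetical logits: high for the true digit, moderate for a lookalike."""
    logits = [0.0] * 10
    logits[true_digit] = 8.0
    logits[runner_up] = 3.0
    return logits


def read_id(per_digit_logits, bias):
    """Decode an ID by taking the argmax of logits + bias for each digit."""
    digits = []
    for logits in per_digit_logits:
        shifted = [x + b for x, b in zip(logits, bias)]
        digits.append(str(shifted.index(max(shifted))))
    return "".join(digits)


# Toy stand-in for the five digits of Phalcon's ID image.
phalcon = [toy_logits(3, 8), toy_logits(2, 7), toy_logits(1, 7),
           toy_logits(6, 5), toy_logits(6, 5)]

clean_bias = [0.0] * 10
tampered_bias = list(clean_bias)
tampered_bias[2] = -100.0  # same edit as the attack script

for label, bias in (("original", clean_bias), ("tampered", tampered_bias)):
    ship_id = read_id(phalcon, bias)
    verdict = "denied (banned ID)" if ship_id == BANNED_ID else "allowed"
    print(label, ship_id, verdict)
```

In this toy setup the "2" falls back to its runner-up class, so the decoded ID no longer equals the banned value while the other four digits are read exactly as before.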

Countermeasure: To prevent model tampering (class invalidation) from occurring in the real environment

Here, we have narrowed down to only those countermeasures that are effective in actual production. We have removed CTF-specific ones (skip verification for specific ships, /playground, etc.) and generalized them.

There aren't many apps that allow you to upload models at will, but if you are building one that accepts model uploads, it pays to be careful.

Fixed supplier (ensuring model integrity)

Strictly manage who distributed which model and when for everything used in production, and design the system so that the app cannot arbitrarily replace a model.

- No runtime replacement: Only pre-built artifacts are used in production. Arbitrary uploads via UI/API are not allowed.

- Integrity verification: SHA-256 and signatures (e.g., Ed25519) are verified at startup and periodically, and any failure results in an immediate fail-close.

- Read-only deployment: Model storage is run in a read-only mount/least privilege container.
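As a minimal sketch of the integrity check, the following compares a model file's SHA-256 digest against a pinned value using only the standard library. The helper names and the stand-in file are assumptions for illustration; signature verification such as Ed25519 is not covered here because it needs a third-party library.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_of(path, chunk_size=1 << 20):
    """Stream the file so large models do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path, expected_hex):
    """Return True only if the file matches the pinned digest (fail closed)."""
    try:
        actual = sha256_of(path)
    except OSError:
        return False  # unreadable or missing file counts as a failure
    return hmac.compare_digest(actual, expected_hex)


# Demo with a stand-in file. In practice the pinned digest would come from a
# trusted release process, not be computed next to the file being checked.
demo = os.path.join(tempfile.mkdtemp(), "model.bin")
with open(demo, "wb") as fh:
    fh.write(b"original weights")
pinned = sha256_of(demo)

print("untouched:", verify_model(demo, pinned))
with open(demo, "ab") as fh:
    fh.write(b"\x00")  # simulate tampering
print("tampered: ", verify_model(demo, pinned))
```

A single changed bias value changes the digest, so the edit made in this challenge would be rejected before the model is ever loaded.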

Load hygiene (don't trust untrusted models directly)

Even if a design handles user-provided models, they cannot go into production unless they go through quarantine → conversion → isolation.

- Format and schema validation: Mechanically inspects input/output shapes, number of labels, permitted layer types, and upper parameter limits. Deviations are rejected.

- Disable dangerous deserialization: Disable custom_objects and set compile=False to avoid reading unnecessary learning information.

- Convert to safe formats: If possible, unify to formats with low code execution potential, such as ONNX / SavedModel / safetensors.

- Isolated evaluation: Import is performed in a separate process/sandbox for bench and sanity testing only. Production data and permissions are not touched.
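One piece of the schema validation, the upper parameter limit, can be sketched without any ML library. The function name, the expected class count of 10, and the `bias_limit` of 10.0 are illustrative assumptions; a real limit would have to be derived from known-good models.

```python
def check_output_layer(kernel_shape, bias, expected_classes=10, bias_limit=10.0):
    """Return a list of problems; an empty list means the checks passed.

    expected_classes and bias_limit are illustrative defaults, not values
    taken from the challenge.
    """
    problems = []
    if len(kernel_shape) != 2 or kernel_shape[1] != expected_classes:
        problems.append(f"unexpected kernel shape {kernel_shape}")
    if len(bias) != expected_classes:
        problems.append(f"expected {expected_classes} biases, got {len(bias)}")
    for index, value in enumerate(bias):
        # value != value is True only for NaN
        if value != value or abs(value) > bias_limit:
            problems.append(f"bias[{index}] = {value} is outside +/-{bias_limit}")
    return problems


clean = [0.02, -0.11, 0.05, 0.00, 0.07, -0.03, 0.01, 0.04, -0.06, 0.02]
tampered = list(clean)
tampered[2] = -100.0

print(check_output_layer((128, 10), clean))
print(check_output_layer((128, 10), tampered))
```

A range check like this catches crude edits such as -100, but an attacker could use a smaller value or alter the kernel instead, so it should be treated as one layer of defense rather than a complete one.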

Robustness of decision logic (resistant to tampering that "destroys specific classes")

Don't rely solely on the output of a single model. Ensure tamper resistance through consistency checks and consensus.

- Eliminate rounding comparison: Comparing values rounded to one decimal place breaks on small fluctuations. Use a two-stage check instead: (a) label match + (b) |p₁−p₂|≤ε.

- Separation of verification and decision: The validation model is for verification only (consistency check only), and the final decision is made by the trusted path.

- Consensus/Redundancy: Important decisions are made by consensus among multiple models or by using rules in combination, making it resistant to "class invalidation" by a single model.

- Sanity gate: Automatically checks that each class outputs a minimum amount (distribution bias, zeroing) before deployment.
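The two-stage check from the first bullet can be sketched as follows. The function name `gates_agree`, the tolerance of 0.05, and the sample IDs and confidences are assumptions for illustration only.

```python
def gates_agree(label_1, p_1, label_2, p_2, epsilon=0.05):
    """Two-stage check: (a) labels match and (b) |p1 - p2| <= epsilon."""
    if label_1 != label_2:
        return False
    return abs(p_1 - p_2) <= epsilon


# Rounded comparison: two nearly identical confidences land in different buckets.
print(round(0.949, 1) == round(0.951, 1))

# Tolerance comparison treats the same pair as consistent.
print(gates_agree("13376", 0.949, "13376", 0.951))

# A changed label fails regardless of how close the confidences are.
print(gates_agree("32166", 0.98, "37166", 0.97))
```

Checking the label first means a gate that silently rewrites a digit is rejected even when its confidence looks plausible.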

Operation monitoring and alerts (early detection of abnormalities)

Ensure that tampering and deterioration can be detected and blocked through operations.

- Class distribution monitoring: Alerts when the occurrence rate of a specific class drops abnormally/becomes zero.

- Hash/Signature Log: Always record model ID, hash, signer, and version on load and notify changes.

- Protective fail: When an anomaly is detected, automatically roll back, switch to a known good model, or temporarily fall back to rule-based judgment.
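Class distribution monitoring can start out very simply. The sketch below flags classes whose share of recent predictions falls below a threshold; the function name, the 1% threshold, and the minimum window of 200 predictions are assumptions that would need tuning against real traffic.

```python
from collections import Counter


def silent_classes(predictions, classes, min_rate=0.01, min_samples=200):
    """Return classes whose share of recent predictions fell below min_rate.

    Returns an empty list until at least min_samples predictions were seen,
    so that small windows do not raise false alarms.
    """
    if len(predictions) < min_samples:
        return []
    counts = Counter(predictions)
    total = len(predictions)
    return [c for c in classes if counts[c] / total < min_rate]


digits = [str(d) for d in range(10)]

healthy = digits * 30                                  # every digit appears
tampered = [d if d != "2" else "7" for d in healthy]   # "2" is never predicted

print(silent_classes(healthy, digits))
print(silent_classes(tampered, digits))
```

A class that drops to zero, as "2" does in this challenge, is exactly the signal such a monitor is meant to surface.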

Summary: BYOM design that can withstand model tampering

In this challenge, a bring-your-own-model (BYOM) upload was wired directly into the production logic, and we confirmed that by tampering with a single bias value in the output layer, we could create a model that never outputs "2" and bypass the prohibited ID check. While AI is intelligent, its behavior can change dramatically if the assumptions about weights and input/output are broken. This is the biggest pitfall.

A model does exactly what its parameters dictate. This straightforwardness is both a strength and an opening for attackers: by tinkering with the internals of the model, they can bend the decision flow expected by the app from the outside.

That's why models should be treated like code: thoroughly implement quarantine, validation, and promotion operations, and protect judgments through consistency checks rather than relying on rounding. AI is not omnipotent, so we need to ensure robustness through design and operation. This is the biggest lesson we learned from this experience.

Learning about how AI works from the perspective of "tricking AI" is a very practical and exciting experience.

If you're interested, we encourage you to give HackTheBox a try.
